I recently published new scoped-arena crate.

It's my implementation of an arena allocator with explicit scopes to reduce total memory usage of the allocator. Additionally I've added built-in drop glue to properly destroy objects put into scope.

Intro

If you're already familiar with arena allocators (aka bump allocators), you can skip this part.

Memory allocators are the key ingredient behind dynamic memory usage. They are very simple on the surface. One function to allocate a memory range which can be used on user's discretion until deallocation function is called for that range.

There are many allocation strategies in the wild. One of them has huge limitations, but in return it is one of the fastest or maybe the fastest. After all sub and and instructions are pretty fast, and that's all that is required to allocate memory in an arena allocator. But how an allocation algorithm can be this simple? Let's see.

Imagine a block of memory. We need to give away a range of the memory from that block for each allocation request (consisting of size and alignment). There are only three requirements:

- Allocated range must not overlap with other allocated ranges, ranges that was already deallocated don't count.

- Range size must be at least the requested size

- Range start must respect memory alignment requirements

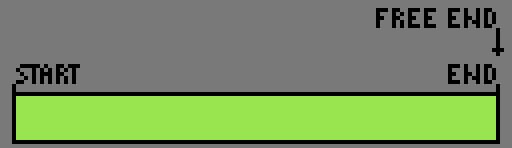

Now if allocator conceptually split memory block into two parts, one already allocated and another free, it would require only single integer to remember where one part ends and another starts. At first the whole memory block is free. Allocator stores end of the free part which equals end of the memory block.

Upon an allocation request the allocator subtracts the requested size from the free end and rounds down to align of the request - sub and and instructions. Now unless result is not before the start of the memory block (within the bounds), allocator updates the free end to the result and returns allocated range. And that's it.

Deallocation is no-op. The only option is to reuse the whole memory block when all allocated ranges are not in use anymore.

Now what do we do when the memory block is exhausted? There's multiple approaches. We'll discuss two of them.

- There's only one, possibly static, memory block. If it is exhausted, allocation simply fails

- Memory blocks are allocated from an underlying allocator. When one block is exhausted, new one is allocated

First approach is trivial. It is employed mainly when there is an upper bound on dynamic memory allocation. Memory block size is chosen to accomodate all expected allocation requests.

Second approach is mainly used to optimize allocations. For example in games an arena allocator can be employed to allocate temporary objects that are alive only during one frame simulation and rendering. Each frame an arena allocator can be reset and memory block reused. Another example would be web services, where lots of allocated memory only needed during a single request handling.

To reduce the number of memory blocks requested from underlying allocator, an arena would create next one larger than the previous. On reset all blocks except the last one are destroyed.

This way sufficiently large memory block will be eventually created and reused over and over, unless a new peak in memory consumption is reached.

Scopes

One problem of this method is that there's no way to reuse memory between resets. Repeated allocations will increase memory footprint even if previously allocated memory is not used when next allocation is performed.

What if at some point in the program we know that all memory allocated since another point is unused? The scope is exactly those two points. Scope is created at one point and destroyed at another. Scope can spawn sub-scopes that can't outlive its parent. Now when sub-scope is reset or destroyed, it is possible to reset memory block usage to the state it was when sub-scope was created. Sub-scopes may spawn their own sub-scopes.

If sub-scope created new memory blocks then only last of the new blocks should be reset. Parent scope will continue using that new block. Reusing last memory block of parent scope would've made allocation algorithm much slower.

On the root scope reset all blocks except the last one are destroyed. After a warm up period the only memory block left would be large enough to serve all necessary allocations.

Thanks to memory reuse by sub-scopes it is possible to reduce total memory consumption by orders of magnitude. In my use case — ECS based game engine — each system introduces a sub-scope as long as temporary objects need not to outlive a single system run. So total memory consumption is not cumulative. Systems that use a lot of arena memory may create sub-scopes from their own scope to further reduce footprint.

Drops

About half of the time when I use an arena allocator, I just need a reference that will live longer than I need to borrow. For this I'd like to simply allocate memory from arena and place value there, preferably using a single safe method from the allocator. This works great for !Drop types. I never bother that values technically leak.

But what if a value needs to be dropped? I've been using bumpalo crate in the past and that problem bothered me a lot when I needed to extend a lifetime of a value, and the value suddenly started to contain types with Drop, which didn't get invoked by bumpalo, leading to memory or resource leaks.

This is why scoped-arena drops values that are put into a scope using to_scope method after the scope is reset.

Internally it holds a list of drops to be performed. Drop list node lives in the same memory range that is allocated by the arena for a value moved into a scope. Upon reset scope traverses the list and drops all the values.

It uses core::mem::needs_drop constant function to determine if drop overhead even required for the type.

Scoped arena also supports collecting iterators into slices in a scope. This is especially useful for dealing with APIs requiring slices (typical for FFI), and only one drop node will be used for the whole slice if needed at all.

Nightly

In order to use Scoped Arena as an allocator for std containers unstable Rust feature allocator_api is needed. This functionality is behind the crate's allocator_api feature. You'll need a nightly toolchain to enable it.

Safety

Thanks to Rust borrow checker Scoped Arena allocator is safe to use. Resetting scope requires mutable borrow which guarantees that all the allocations borrowed from the scope immutably are unused.

Comparison with bumpalo crate

This crate is kinda re-imagined bumpalo crate that I've been using a lot previously.

Here's few differences:

bumpaloprovides copy of some std types to use withbumpalo::Bumpwhileallocator_apiis still unstable. Currentlyscoped-arenalacks those. With unstableallocator_apifeature enabled standard containers can be used with allocators from both crates.Scope::to_scopeis the same asBump::allocexcept it will register drop-glue if it is required for the type.Bump::allocwill leak the value, which is not a problem for some use cases. DropingScopewith registered drop glue is naturally slower than without, but user pays only for actual drops that are rarely no-op.Bump::alloc_slice_fill_iterworks only onExactSizeIterator,Scope::to_scope_from_iterworks on any iterator, but will fail allocation if upper bound is too large orNone. Alsobumpalos version will panic if iterator lied, whilescoped-arena's version will return shorter slice if iterator ends earlier.Scope::to_scope_from_iteralso registers drop glue for the slice.Bumphas few more methods to fill allocated slice.bumpalohas no scopes.

Summary

Reducing memory overhead while allocating dynamic memory in hot path without performance penalty is now easier than ever.